7.2 A Different Cost Function: Logistic Regression

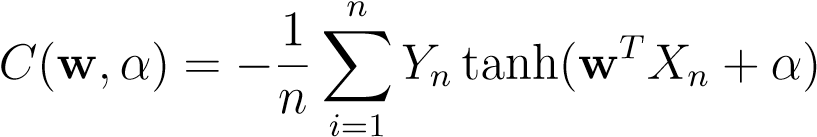

The cost function of Eq. 7.2 penalizes gross violations of one's predictions rather severely (quadratically). This is sometimes counter-productive because the algorithm might get obsessed with improving the performance of one single data-case at the expense of all the others. The real cost simply counts the number of mislabelled instances, irrespective of how badly off your prediction function $w^T X_n + \alpha$ was. So, a different function is often used,

$$C(w,\alpha) = \frac{1}{2}\sum_{n=1}^{N}\left(Y_n - \tanh\left(w^T X_n + \alpha\right)\right)^2 \qquad (7.10)$$
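To make the robustness argument concrete, the small sketch below evaluates the cost contributed by a single data-case with label $Y_n = +1$ as its score $w^T X_n + \alpha$ becomes increasingly wrong. It assumes labels in $\{-1,+1\}$, that Eq. 7.2 contributes $\tfrac{1}{2}(Y_n - w^T X_n - \alpha)^2$ per data-case, and that Eq. 7.10 contributes $\tfrac{1}{2}(Y_n - \tanh(w^T X_n + \alpha))^2$; the function names `quadratic_cost` and `tanh_cost` are hypothetical.

```python
import math

def quadratic_cost(y, score):
    """Per-data-case squared error on the raw score: 0.5 * (y - score)**2 (assumed form of Eq. 7.2)."""
    return 0.5 * (y - score) ** 2

def tanh_cost(y, score):
    """Per-data-case squared error on the squashed score: 0.5 * (y - tanh(score))**2 (assumed form of Eq. 7.10)."""
    return 0.5 * (y - math.tanh(score)) ** 2

# One data-case with label y = +1 whose score w^T X_n + alpha is increasingly wrong.
y = 1.0
for score in [1.0, -1.0, -5.0, -50.0]:
    print(f"score={score:6.1f}  quadratic={quadratic_cost(y, score):8.2f}  tanh={tanh_cost(y, score):.4f}")
```

The quadratic cost keeps growing as the score moves away from the label, while the tanh-based cost approaches 2 and stays there, so one badly misclassified data-case cannot dominate the sum.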

The function $\tanh(\cdot)$ is plotted in figure ??. It shows that $\tanh$ is bounded between $-1$ and $1$, so with labels $Y_n \in \{-1,+1\}$ a single data-case can never contribute more than 2 to the cost, which ensures robustness against outliers. We leave it to the reader to derive the gradients and formulate the gradient descent algorithm.
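For readers who want to check their derivation, here is one possible sketch. Assuming Eq. 7.10 has the form $C(w,\alpha) = \tfrac{1}{2}\sum_n (Y_n - \tanh(z_n))^2$ with $z_n = w^T X_n + \alpha$, and using $\frac{d}{dz}\tanh(z) = 1 - \tanh^2(z)$, the chain rule gives

$$\frac{\partial C}{\partial w} = -\sum_{n=1}^{N}\left(Y_n - \tanh(z_n)\right)\left(1 - \tanh^2(z_n)\right)X_n, \qquad \frac{\partial C}{\partial \alpha} = -\sum_{n=1}^{N}\left(Y_n - \tanh(z_n)\right)\left(1 - \tanh^2(z_n)\right).$$

The code below implements plain batch gradient descent on this cost with NumPy. The step size `eta`, the number of steps `n_steps`, the helper names, and the two-cluster toy data are all illustrative assumptions, not values from the text.

```python
import numpy as np

def tanh_cost_and_grads(w, alpha, X, Y):
    """Return C(w, alpha) = 0.5 * sum_n (Y_n - tanh(w^T X_n + alpha))**2 and its gradients.

    X has shape (N, d) with one data-case per row; Y has shape (N,) with labels in {-1, +1}.
    """
    z = X @ w + alpha                 # scores w^T X_n + alpha, shape (N,)
    t = np.tanh(z)                    # predictions squashed into (-1, 1)
    r = Y - t                         # residuals Y_n - tanh(z_n)
    cost = 0.5 * np.sum(r ** 2)
    dz = -r * (1.0 - t ** 2)          # dC/dz_n, using d tanh(z)/dz = 1 - tanh(z)**2
    grad_w = X.T @ dz                 # sum_n dC/dz_n * X_n
    grad_alpha = np.sum(dz)           # sum_n dC/dz_n
    return cost, grad_w, grad_alpha

def fit(X, Y, eta=0.01, n_steps=2000):
    """Batch gradient descent on the tanh cost; eta and n_steps are illustrative, untuned values."""
    w = np.zeros(X.shape[1])
    alpha = 0.0
    for _ in range(n_steps):
        _, grad_w, grad_alpha = tanh_cost_and_grads(w, alpha, X, Y)
        w = w - eta * grad_w
        alpha = alpha - eta * grad_alpha
    return w, alpha

# Toy data (hypothetical): two Gaussian clusters labelled +1 and -1.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(2.0, 1.0, size=(50, 2)), rng.normal(-2.0, 1.0, size=(50, 2))])
Y = np.concatenate([np.ones(50), -np.ones(50)])
w, alpha = fit(X, Y)
accuracy = np.mean(np.sign(X @ w + alpha) == Y)
print("training accuracy:", accuracy)
```

Because the factor $1 - \tanh^2(z_n)$ goes to zero for large $|z_n|$, data-cases with very large scores, including badly misclassified outliers, contribute almost nothing to the gradient. The flip side is that a data-case that is confidently wrong may also be slow to correct.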